Recap

KKT, Lagrangian

\[L(w, b, \alpha, \beta) = f(w,b) + \sum_{i=1}^{N}{\alpha_ig_i(w,b)} + \sum_{i=1}^{N}{\beta_ih_i(w,b)}\]

\[\theta(w,b) = \max_{\alpha, \beta} L(w, b, \alpha, \beta) \]

\[\theta(w,b) = \begin{cases} f(w,b) \text{\: if feasible} \\ \infty \text{\: otherwise} \end{cases}\]

\[\min_{w,b}\theta(w,b) = \min_{w,b}\max_{\alpha, \beta, \alpha_1 \geq 0} L(w, b, \alpha, \beta) \]

if \(f, g\) are convex, ?affine

\[ \min_{w,b}\max_{\alpha, \beta, \alpha_1 \geq 0} L(w, b, \alpha, \beta) = \max_{w,b}\min_{\alpha, \beta, \alpha_i \geq 0} L(w, b, \alpha, \beta) \]

Constraints, partial derivatives of \(w, b, \beta_i, \alpha_i\) are all \(0\).

SVM-primal

\[\min_{w,b} \frac12 \|w\|^2 \\ \text{s.t. \quad} l^i(w^Tx_i+b) \geq 1 \text{\quad for i=1, ...N}\]

\[g_i(w,b) = 1-l^i(w^Tx_i+b) \leq 0\\ \to L(w, b, \alpha) = \frac12 \|w\|^2 - \sum_{i=1}^m{\alpha_i (l^i(w^Tx_i+b-1))} \]

\[\nabla_wL(w, b, \alpha) = w - \sum_{i=1}^{m}\alpha_il^ix^i=0 \\ \to w = \sum_{i=1}^{m}\alpha_il^ix^i\]

\[\nabla_bL(w, b, \alpha) = \sum_{i=1}^{m}\alpha_il^i = 0 \]

Put back to lagrangian,

\[ \begin{aligned} L(w,b,\alpha) &= \frac12 \|\sum_{i=1}^{m}\alpha_il^ix^i\|^2 - \sum_{i=1}^m{\alpha_i (l^i(w^Tx_i+b-1))} \\ &= -\frac12 \|\sum_{i=1}^{m}\alpha_il^ix^i\|^2 + \sum_{i=1}^m{\alpha_i} \end{aligned}\]

Then the goal is

\[\max -\frac12 \|\sum_{i=1}^{m}\alpha_il^ix^i\|^2 + \sum_{i=1}^m{\alpha_i} \\ \alpha_i \geq 0, \quad \sum_{i=1}^N{\alpha_il^i}=0\]

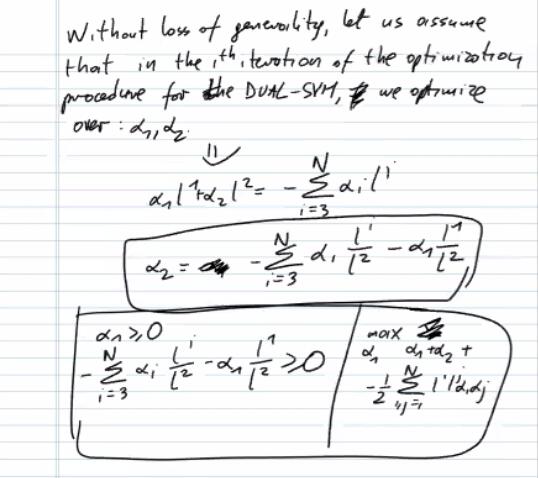

Without loss of generality, let us assume that in the \(i^{th}\) iteration of the optimization procedure for the DUAL-SVM, we optimize over: \(\alpha_1, \alpha_2\)

\[\alpha_1l^1 + \alpha_2l^2 = -\sum_{i=3}^N{\alpha_il^i}\]

\[\alpha_2 = \frac{-\sum_{i=3}^N{\alpha_il^i} - \alpha_1l^1 }{l^2} \]

Each step freeze 2, optimize others. (Heuristic)

Kernel Ridge Regression

\(\{x_i\}, \{y_i\}\)

Cost function (linear) \[ C(w) = \frac12 \sum_{i=1}^N{(y_i - w^Tx_i)^2} \]

\[H: w^Tx + b = 0\]

\(x_i' = x_i \text{\: concating 1}\)

Optimization for standard linear regression is just minimize \(C(w)\)

In ridge regression,

\[\min_w C(w) = \frac12 \sum_{i=1}^N{(y_i - w^Tx_i)^2} + \frac12 \lambda \|w\|_2^2\]

\[\nabla_{w^*}C(w^*) = 0\]

\[w^* = \big(\lambda I + \sum_{i=1}^N{x_ix_i^T}\big) ^{-1}\big(\sum_{j=1}^N{y_jx_j^T}\big)\]

- Time complexity:

- each \(x_ix_i^T\) is a matrix in \(\mathbb{R}^{d\times d}\),

- \(\sum_N x_ix_i^T\) takes \(Nd^2\)

- \(\big(\lambda I + \sum_{i=1}^N{x_ix_i^T}\big) ^{-1}\) takese \(d^3\)

Approximate kernel Method \[x_i \to \phi(x_i)\]

\[w = \big(\lambda I + \sum_{i=1}^N{\phi(x_i)\phi(x_i)^T}\big) ^{-1}\big(\sum_{j=1}^N{y_j\phi(x_j)^T}\big)\]

Matrix Identity

P, B, R

\[(P^{-1} + B^TR^{-1}B)^{-1}B^TR^{-1} = PB^T(BPB^T+R)^{-1}\]

\(B\in\mathbb{R}^{r\times p}\)

\[B^TR^{-1} = (P^{-1} + B^TR^{-1}B)PB^T(BPB^T+R)^{-1}\]

\[B^TR^{-1}(BPB^T+R) = (P^{-1}+B^TR^{-1}-B)PB^T\]

\[w = (\lambda I +\phi\phi^{-1})\phi_y = \phi(\phi^T\phi + \lambda I)^{-1}y\]

\[\phi(X)^T\phi(X) \in \mathbb{R}^{N\times N}\]

Predictions

\[x\in\mathbb{R}^d, y=w^T\phi(x)\]

number of random features \[m = \theta(d\log d)\]

G: Gaussian unstructured matrix \(\in \mathbb{R}^{d\times d}\)

- Get Gaussian Orthogonal Matrix

- Gram-schmidt orthogonalization approach:

- rows of l2-norm 1

- Apply sample scalars: s1, ..., sd independently from \(\chi(d)\) distribution and apply them to renormalize rows (Or simply \(\sqrt{d}\), but biased)

- random hadamard matrices

- \(\frac1{\sqrt{d}} HD_1 ... \frac1{\sqrt{d}} HD_L\)

- renormalize

- Givens rotations

- also renormalize

- Gram-schmidt orthogonalization approach: